Big Model Tutorial

Techniques and Systems to Train and Serve Bigger Models

Abstract

In recent years, researchers in ML and systems have been working together to bring big models -- such as GPT-3 with 175B parameters -- into research and production. It has been revealed that increasing model sizes can significantly boost ML performance, and even lead to fundamentally new capabilities.

However, experimenting and adopting big models call for new techniques and systems to support their training and inference on big data and large clusters. This tutorial identifies research and practical pain points in model-parallel training and serving. In particular, this tutorial introduces new algorithmic techniques and system architectures for addressing the training and serving of popular big models, such as GPT-3, PaLM, and vision transformers. The tutorial also consists of a session on how to use the latest open-source system toolsets to support the training and serving of big models. Through this tutorial, we hope to lower the technical barrier of using big models in ML research and bring the big models to the masses.

Slides

(Download PDF)

Introduction Video

(Recordings of other sections can be found in the link above and will be uploaded to YouTube soon)

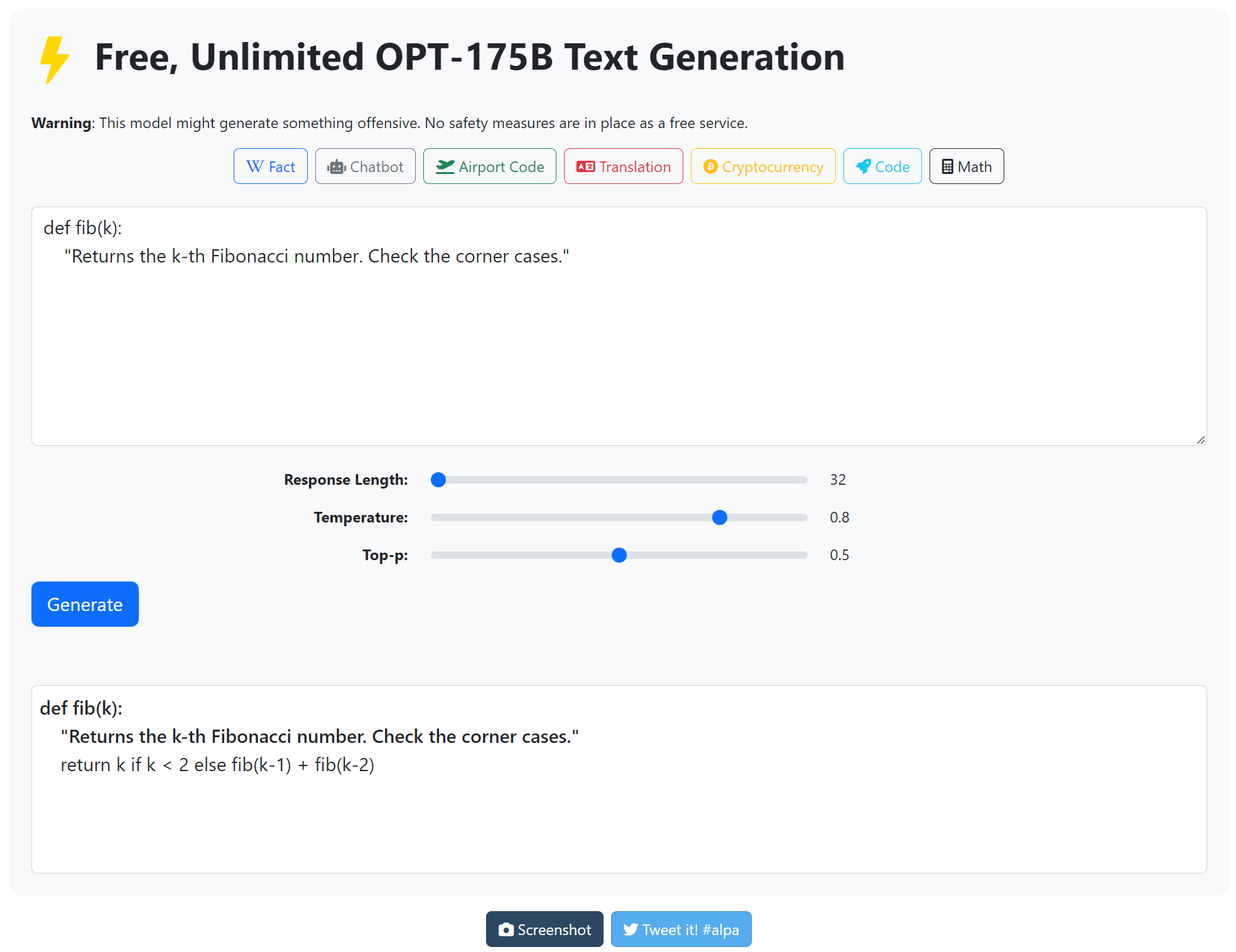

OPT-175B Prompting Demo

Try Alpa-hosted OPT-175B prompting at https://opt.alpa.ai/, no credit card needed!

Speakers

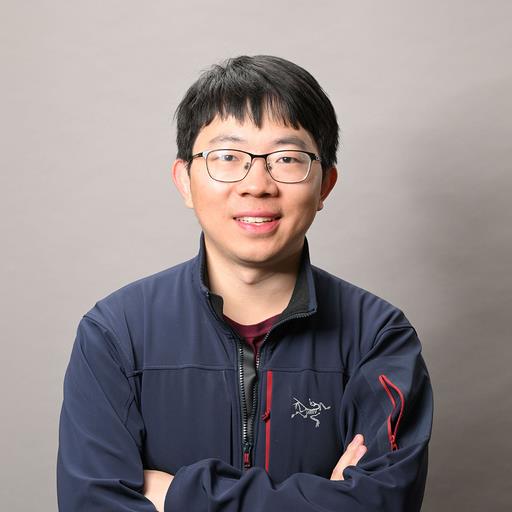

Hao Zhang is a postdoctoral researcher at UC Berkeley, working with Prof. Ion Stoica. He is recently working on building end-to-end composable and automated systems for large-scale distributed deep learning.

Lianmin Zheng is a Ph.D. student in the EECS department at UC Berkeley, advised by Ion Stoica and Joseph E. Gonzalez. His research interests lie in the intersection of machine learning and programming systems, especially domain-specific compilers for accelerated and scalable deep learning.

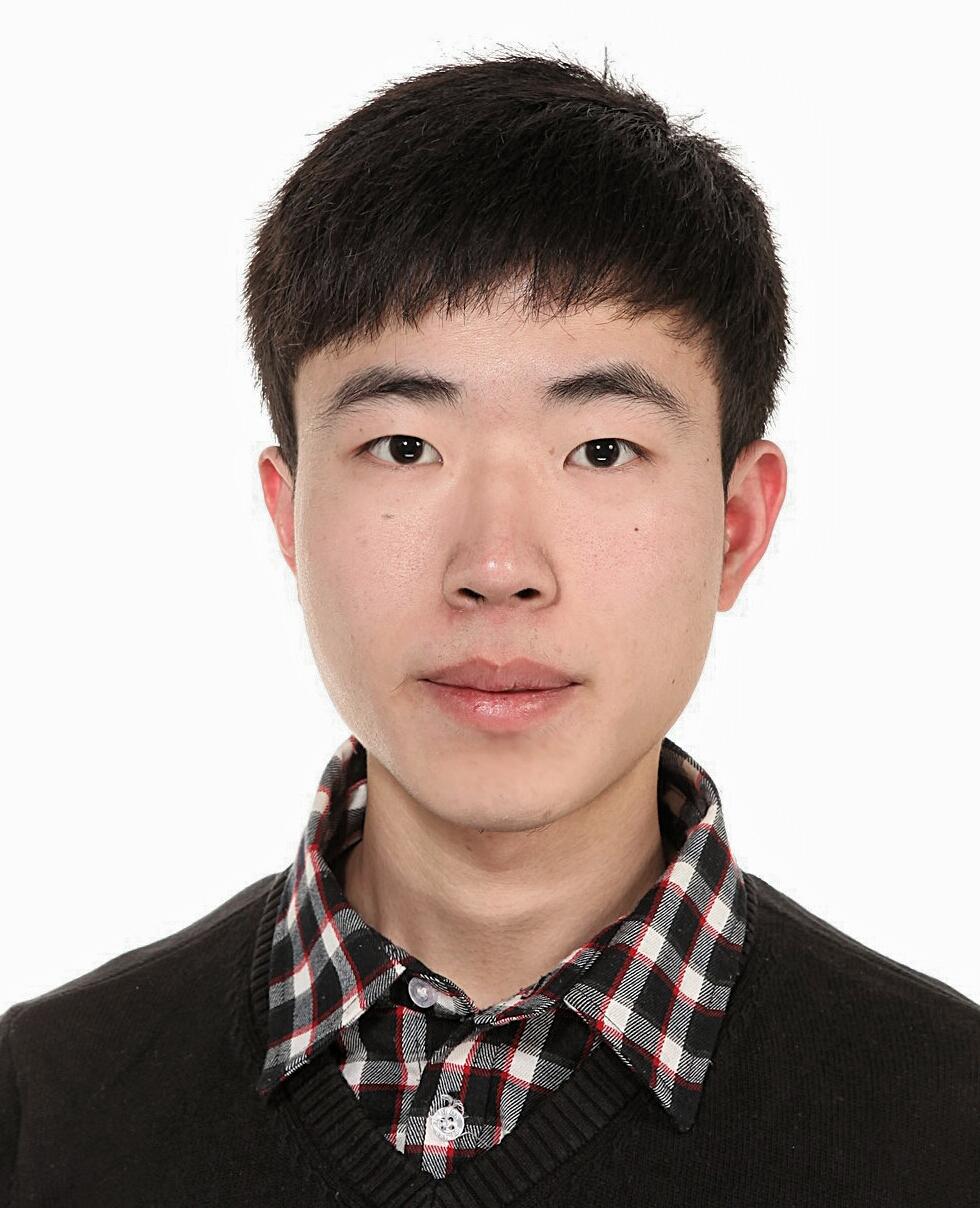

Zhuohan Li is a PhD student in Computer Science at UC Berkeley advised by Ion Stoica. His interest lies in the intersection of machine learning and distributed systems. He uses insights from different domains to improve the performance (accuracy, efficiency, and interpretability) of current machine learning models.

Ion Stoica is a Professor in the EECS Department at UC Berkeley. He does research on cloud computing and networked computer systems. Past work includes Apache Spark, Apache Mesos, Tachyon, Chord DHT, and Dynamic Packet State (DPS). He is an ACM Fellow and has received numerous awards, including the SIGOPS Hall of Fame Award (2015), the SIGCOMM Test of Time Award (2011), and the ACM doctoral dissertation award (2001). In 2013, he co-founded Databricks a startup to commercialize technologies for Big Data processing.